The problem

I work on UML diagrams a lot as a part of my work and my tool of choice for a while has been PlantUML. Easy to write, easy to render and easy to share. My problem was that I frequently hit the UML version of writer’s block, aka laziness. Despite my familiarity with the syntax and capabilities of PlantUML, there are times when I struggle to muster the motivation needed to create new diagrams. This seemed like a problem tailor-made for GenAI.

Requirements

- Should be easy to make over the long weekend (Good Friday).

- Shouldn’t spend time crafting the UI.

- Should be easy to deploy.

- Shouldn’t burn a hole in my pocket.

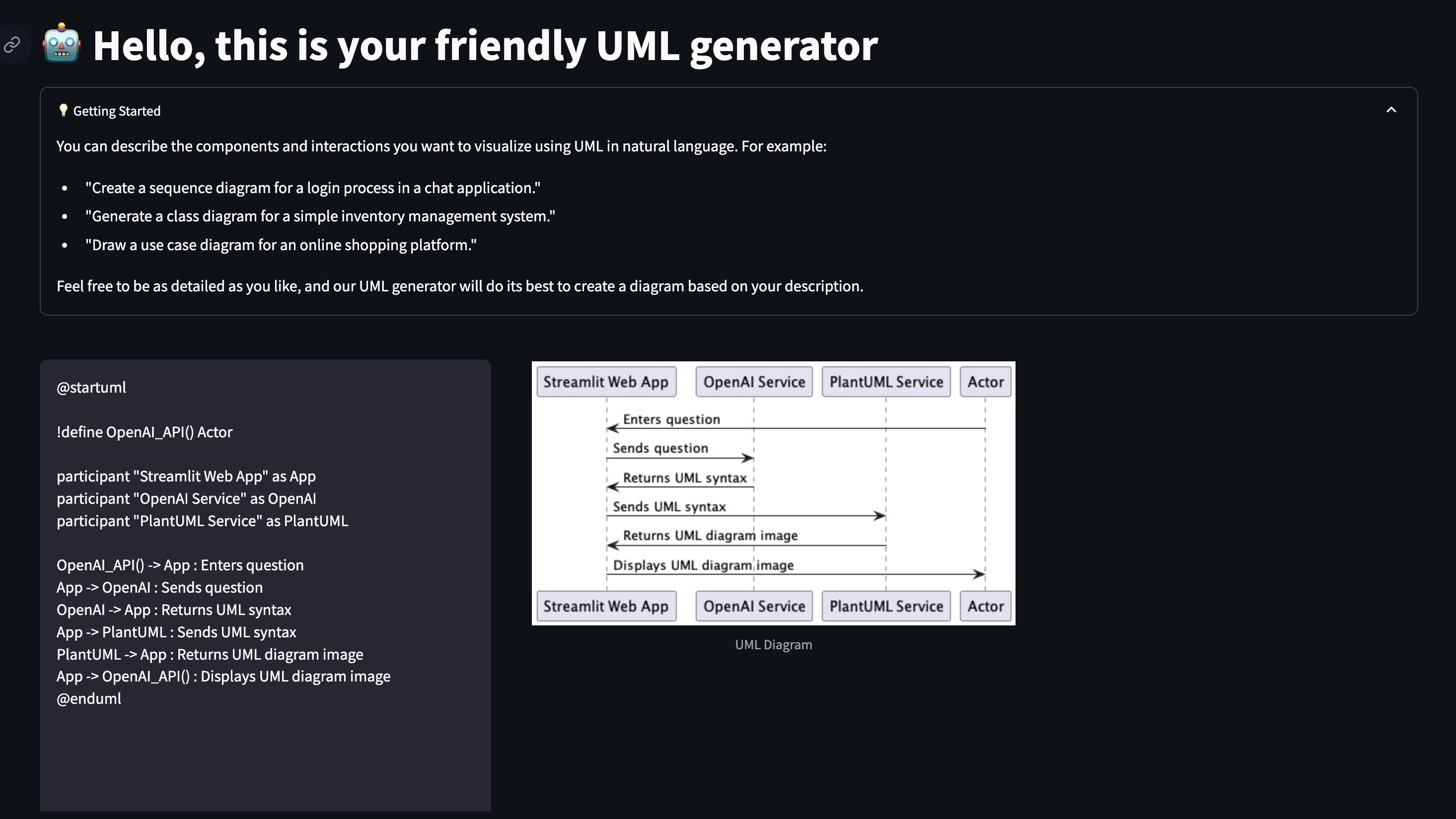

Why tell when you can show?

https://app.chatuml.online is now live 🎉 [at least until I run out of ChatGPT credits]

Prompt :

Generate a UML diagram for the following flow :

User enters a question, which is forwarded to OpenAI,

which generates a UML diagram and the diagram is rendered using the PlantUML jar

and this app is built using Streamlit and deployed using fly.io

The result :

The build

I’ll walk you through the practical steps involved in creating an AI-powered UML generator. I’ll cover essential aspects such as cost considerations, frontend choices, deployment strategies, prompt formulation, and AI model integration.

Front end

There were quite a few front end choices:

- Build it using Rails with a starter template like Bullet Train

- Build it using v0 by Vercel

- Use Streamlit

I chose Streamlit because it was the easiest to get started with, and it comes with a lot of bells and whistles and guides for building an LLM-powered chat application. In no time, I could spin up a chat app with history, connect it to OpenAI and use my prompts. Streamlit puts a lot of constraints on what can be customized, but for building the MVP of an idea it seemed like the best choice. Getting started with a chat application on Streamlit was as simple as this :

    import streamlit as st
    from openai import OpenAI

    st.title("ChatGPT-like clone")

    # Set OpenAI API key from Streamlit secrets
    client = OpenAI(api_key=st.secrets["OPENAI_API_KEY"])

    # Set a default model
    if "openai_model" not in st.session_state:
        st.session_state["openai_model"] = "gpt-3.5-turbo"

    # Initialize chat history
    if "messages" not in st.session_state:
        st.session_state.messages = []

    # Display chat messages from history on app rerun
    for message in st.session_state.messages:
        with st.chat_message(message["role"]):
            st.markdown(message["content"])

    # Accept user input
    if prompt := st.chat_input("What is up?"):
        # Add user message to chat history
        st.session_state.messages.append({"role": "user", "content": prompt})
        # Display user message in chat message container
        with st.chat_message("user"):
            st.markdown(prompt)

** This is not a sponsored post.

The prompt

    # user_input holds the text the user typed into the chat box
    prompt = (
        "Given the software engineering problem described below, generate a solution strictly in PlantUML diagram syntax. "
        "Ensure the output is fully compliant with PlantUML syntax and conventions, without any additional text or explanation. "
        "Try to first generate a sequence diagram and then try other forms of UML if no form is explicitly mentioned.\n\n"
        f"Problem Description: '{user_input}'\n\n"
        "PlantUML Diagram:\n"
    )

    response = client.chat.completions.create(
        model="gpt-4-1106-preview",
        messages=[{"role": "user", "content": prompt}],
        # Halt generation as soon as the model reaches the end of the diagram
        stop="@enduml",
    )

My main concern was making sure the output contains only PlantUML content. I stated that condition explicitly in the prompt and also set the stop parameter so that generation halts at @enduml.
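One detail worth handling: the OpenAI API does not include the stop sequence in the returned text, so the response ends just before @enduml. Below is a minimal sketch of a cleanup step, assuming the model may also wrap its answer in a markdown code fence or add text before @startuml. The helper name `normalize_plantuml` is hypothetical and not part of the original app.

```python
FENCE = "`" * 3  # markdown code fence marker


def normalize_plantuml(raw: str) -> str:
    """Return PlantUML source that starts with @startuml and ends with @enduml."""
    # Drop any markdown fence lines the model may have added around the diagram
    lines = [ln for ln in raw.strip().splitlines()
             if not ln.strip().startswith(FENCE)]
    text = "\n".join(lines).strip()

    # Discard anything before @startuml, or add the marker if it is missing
    start = text.find("@startuml")
    text = text[start:] if start != -1 else "@startuml\n" + text

    # Cut at @enduml if present; otherwise re-append it, since the stop
    # sequence itself is never part of the API response
    end = text.find("@enduml")
    if end != -1:
        text = text[:end].rstrip()
    return text + "\n@enduml"
```

The cleaned string can then be handed to the renderer, for example `normalize_plantuml(response.choices[0].message.content)`.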

Choosing a model

Claude vs ChatGPT 4

I got better results with ChatGPT 4 Turbo than with Claude, so I decided to stick with OpenAI.

Generating the UML diagram

PlantUML provides a nifty .jar file that can be used to generate images.
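As a rough sketch of how the jar could be called from the Python app: PlantUML's `-pipe` flag reads diagram source from stdin and writes the image to stdout, which avoids temporary files. The jar location (`plantuml.jar` in the working directory) and the function names are assumptions for illustration, not the app's actual code.

```python
import subprocess


def build_plantuml_command(jar_path="plantuml.jar", image_format="png"):
    """Build the java command that renders a diagram from stdin to stdout."""
    # -pipe: read source from stdin, write image to stdout
    # -tpng / -tsvg: select the output format
    return ["java", "-jar", str(jar_path), "-pipe", f"-t{image_format}"]


def render_diagram(source, jar_path="plantuml.jar", image_format="png"):
    """Render PlantUML source and return the image as bytes.

    Requires Java on the PATH and the jar at jar_path (assumed location).
    Raises subprocess.CalledProcessError if PlantUML exits with an error.
    """
    result = subprocess.run(
        build_plantuml_command(jar_path, image_format),
        input=source.encode("utf-8"),
        capture_output=True,
        check=True,
    )
    return result.stdout
```

Calling `render_diagram("@startuml\nA -> B\n@enduml")` would return PNG bytes that the app can display or offer for download.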

Deployments

fly.io is my go-to deployment option because it supports multiple regions and makes deploying Docker containers very easy. The application itself is simple, consisting mainly of

- A Python app

- A PlantUML jar

and the Dockerfile is pretty straightforward :

    # Use an official Python runtime as a parent image
    FROM python:3.8-slim-bullseye

    # Set the working directory in the container
    WORKDIR /usr/src/app

    # Install Java for PlantUML
    RUN apt-get update && \
        apt-get install -y openjdk-11-jre-headless graphviz && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/* && \
        pip install --no-cache-dir pyparsing pydot

    # Copy the current directory contents into the container at /usr/src/app
    COPY . .
    COPY .streamlit/ ./.streamlit/

    # Install any needed packages specified in requirements.txt
    RUN pip install --no-cache-dir -r requirements.txt

    # Make port 8501 available to the world outside this container
    EXPOSE 8501

    # Define environment variable
    ENV NAME World

    # Run app.py when the container launches
    CMD ["streamlit", "run", "app.py"]

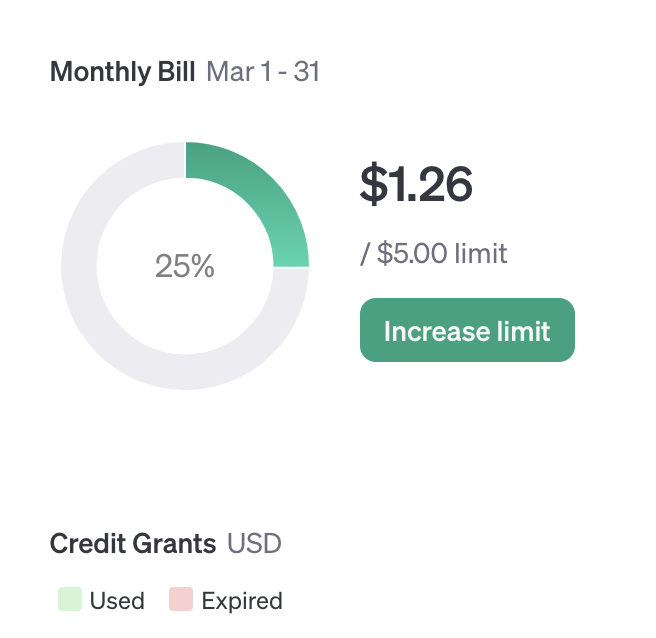

Cost

| Category | Price |
|---|---|
| Domain | 10c |
| Hosting | Free |
| AI | $5 |

Capping OpenAI cost :

OpenAI lets you set a budget. So far I have used around $1.26 of the total $5, and since the output is constrained, I expect this $5 to last a while.
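To put a rough number on "a while", here is a back-of-the-envelope sketch. Only the $5 budget and $1.26 spend come from this post. The token counts and per-1K-token prices in the usage example are placeholder assumptions and should be replaced with your own usage data and OpenAI's current pricing.

```python
def remaining_budget(total_usd, spent_usd):
    """Budget left, rounded to cents."""
    return round(total_usd - spent_usd, 2)


def estimate_requests_left(remaining_usd, prompt_tokens, completion_tokens,
                           input_price_per_1k, output_price_per_1k):
    """Estimate how many more requests fit in the remaining budget."""
    cost_per_request = (prompt_tokens / 1000 * input_price_per_1k
                        + completion_tokens / 1000 * output_price_per_1k)
    return int(remaining_usd // cost_per_request)


left = remaining_budget(5.00, 1.26)  # figures from this post

# Hypothetical inputs: 150 prompt tokens and 350 completion tokens per request,
# at assumed prices of $0.01 and $0.03 per 1K tokens
requests_left = estimate_requests_left(left, 150, 350, 0.01, 0.03)
```

Because the stop parameter cuts generation off at the end of the diagram, completion tokens per request stay bounded, which is what keeps the per-request cost predictable.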